Opera Will Let Users Run LLMs Locally

Opera is introducing a new feature that will allow users to download and use Large Language Models (LLMs) locally on their computers. For now, the feature is available only to Opera One users who receive developer stream updates. It gives users access to more than 150 models from more than 50 families, including Llama from Meta, Gemma from Google, Vicuna, Mistral AI and more.

Opera calls these new features part of its “AI Feature Drops Program” and underlines that user data will be kept locally on their devices, allowing them to use generative AI without needing to send information to a server. The company uses the Ollama open source framework to run these models on users’ computers. Each model variant takes up 2-10 GB of local storage.
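To give a sense of what running a model through Ollama looks like outside the browser, here is a minimal Python sketch. It is not Opera's integration, which the company has not detailed here. It assumes a standalone Ollama install serving its HTTP API on the default local address `http://localhost:11434`; the helper names `build_request` and `ask_local_model` and the model name used afterwards are illustrative placeholders.

```python
import json
import urllib.request

# Assumption: a standalone Ollama server on its default local address.
# This is unrelated to however Opera wires Ollama into the browser.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.loads(response.read().decode("utf-8"))
    # With streaming disabled, the generated text is in the "response" field.
    return reply["response"]
```

With a model already downloaded locally, a call such as `ask_local_model("llama3", "What is a local LLM?")` would return the generated text; the request only ever goes to `localhost`, which is the point of running the model on the device.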

As a reminder, with the launch of Opera One last year, the company made it clear that it wanted to build an AI-centric flagship browser, and it introduced its AI assistant, Aria.

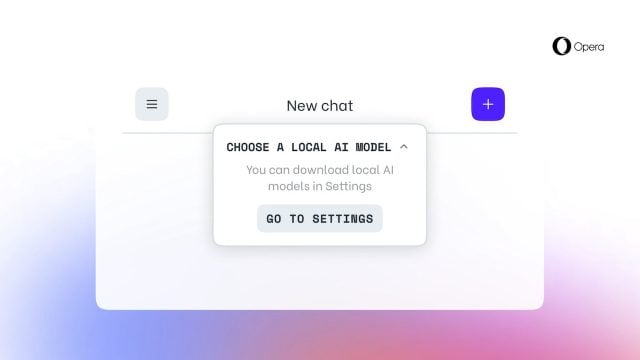

To try this new feature, upgrade to the latest version of Opera Developer and enable local LLMs on your computer by following the steps below.