Artificial Intelligence Support in Hardware: What is NPU and What Does It Do?

We have entered the age of artificial intelligence very quickly. Artificial intelligence, which has begun to touch every area of our lives, making things easier and increasing productivity, continues to progress at the speed of light. As always, the giant players of the technology arena are focusing on AI technologies to stand out and keep up with innovations. Companies such as Intel, AMD and Qualcomm have already started to take steps on the hardware side. Today we will take a closer look at the special AI unit that you are just starting to hear about and will hear about more often in the future: the NPU (Neural Processing Unit).

The NPU, which stands for neural processing unit, is intended to accelerate artificial intelligence tasks. Apple has actually been using NPUs in its chips for years, so this is nothing new. However, with all brands adopting artificial intelligence and AI tools becoming widespread, a new trend has begun.

If you have been looking at hardware manufacturers in recent months, you may have seen that they are talking about the chips we call “NPU”. Until now, when buying a computer, you only checked the CPU. Now we can say that Intel and AMD are making things a little more complicated with their artificial intelligence-supported processors. So now there may be another factor you need to pay attention to.

New generation laptops are now starting to come with an NPU. Since we are just at the beginning, things are not very complicated, but in the future we will see these AI units develop and gain more functions. We wanted to inform you early. So what is this NPU?

Why Do We Need NPU?

Let’s start with a summary. Tools that use artificial intelligence also need computing power. Requirements and computational needs differ depending on the sector, area of use and software. With the increasing demand for generative artificial intelligence use cases, there is a need for a renewed computing architecture designed specifically for artificial intelligence.

Alongside the central processing unit (CPU) and graphics processing unit (GPU), chips called neural processing units (NPU) were designed from scratch for artificial intelligence tasks. When a suitable processor is paired with an NPU, new and advanced generative artificial intelligence experiences become possible, and the performance and efficiency of applications improve. In addition, power consumption decreases, which benefits battery life.

What is NPU?

At its core, the NPU is a special processor designed to execute machine learning algorithms. Unlike traditional CPUs and GPUs, NPUs are optimized to carry out the complex mathematical calculations that are an integral part of artificial neural networks. Specially designed neural processors can do an excellent job of processing large amounts of data in parallel. In this way, image recognition, natural language processing and other tasks related to artificial intelligence can be handled much more easily. For example, if an NPU were integrated within the GPU, the NPU could be responsible for a specific task, such as object detection or image acceleration.

Designed to accelerate neural network operations and artificial intelligence tasks, the neural processing unit is integrated into CPUs and SoCs rather than being a separate chip. Unlike CPUs and GPUs, NPUs are optimized for data-driven parallel computing. Besides handling many tasks at once, they are quite efficient at processing large multimedia data such as videos and images and at processing data for neural networks. They will be especially useful for speech recognition, for background blurring and object detection in video calls, and for photo and video editing.

An NPU is also an integrated circuit, but it differs from single-function ASICs (Application Specific Integrated Circuits). While ASICs are designed for a single purpose (like bitcoin mining), NPUs offer greater complexity and flexibility and can meet the diverse demands of neural network computing. This is made possible through custom programming in software or hardware tailored to the unique requirements of neural network calculations.

Even though most consumers are not aware of it, they will soon have artificial intelligence support on their computers. Often NPUs will be integrated into the CPU, as in the Intel Core/Core Ultra series or the new AMD Ryzen 8040 series laptop processors. However, in larger data centers or more specialized industrial operations, it may be possible to see the NPU in a different format. We can even see it as a completely separate processor on the motherboard, separate from other processing units.

The NPU was built from the ground up to accelerate low-power AI inference. Artificial intelligence workloads consist primarily of calculating neural network layers made up of scalar, vector and tensor mathematics, followed by a non-linear activation function. A well-designed NPU can handle these demanding workloads efficiently.
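To make the "layer followed by an activation function" idea concrete, here is a minimal sketch in plain Python. It is only an illustration of the arithmetic, not how any particular NPU is programmed: the function names, the weights and the choice of ReLU as the activation are assumptions made for this example.

```python
def dense_layer(inputs, weights, biases):
    """Weighted sum per output neuron (the multiply-accumulate step)."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        acc = bias
        for x, w in zip(inputs, neuron_weights):
            acc += x * w  # one multiply-accumulate (MAC) operation
        outputs.append(acc)
    return outputs


def relu(values):
    """Non-linear activation: negative values are clamped to zero."""
    return [max(0.0, v) for v in values]


# Illustrative numbers only: 3 inputs feeding 2 neurons.
inputs = [1.0, 2.0, 3.0]
weights = [[0.5, -1.0, 0.25], [-0.5, 0.5, 0.0]]
biases = [1.0, -1.0]

pre_activation = dense_layer(inputs, weights, biases)
activated = relu(pre_activation)
print(pre_activation, activated)
```

A real network repeats this pattern across many layers and far larger matrices, which is why hardware that performs many multiply-accumulate operations in parallel pays off.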

NPU, GPU and CPU: They Are All Different

While GPUs are proficient at parallel processing and are often used in machine learning, NPUs take this particular expertise one step further. GPUs are versatile and excel at handling tasks in parallel as well as at graphics processing. CPUs used for general purposes are the brain of a computer and perform a wide variety of tasks.

NPUs, on the other hand, are built specifically to accelerate deep learning algorithms and are adapted to carry out the specific operations that neural networks require. Because they focus on specific tasks and carry special optimizations, they provide significantly higher performance for AI workloads than CPUs, or even GPUs in certain scenarios.

In fact, graphics chips are quite successful in artificial intelligence, and many artificial intelligence and machine learning tasks are offloaded to GPUs. However, there is an important distinction between GPU and NPU. While GPUs are known for their parallel computing capabilities, specialized circuits are needed to handle machine learning workloads effectively; beyond graphics processing, GPUs are capable but not ideal. As most of you know, the Tensor Cores in NVIDIA graphics cards serve this purpose. AMD and Intel have also integrated similar circuits into their GPUs. The aim was to perform resolution upscaling, which is a very common artificial intelligence workload.

An NPU, so to speak, takes these circuits out of the GPU (which also performs a number of other operations) and turns them into a dedicated unit in its own right. As a result, artificial intelligence tasks can be performed more efficiently at a lower power level, which is especially useful for laptops.

GPNPU: Fusion of GPU and NPU

A concept called GPNPU (GPU-NPU hybrid) has also emerged, aiming to combine the strengths of GPUs and NPUs. GPNPUs can leverage the NPU architecture to accelerate AI-centric tasks while also taking advantage of the parallel processing capabilities of GPUs. By combining both within a single chip, the design aims to strike a balance between versatility and specialized AI processing and to meet diverse computing needs.

Heterogeneous Architecture

Meeting the varied requirements and computational demands of artificial intelligence takes a variety of processors. A heterogeneous computing architecture that uses processing diversity makes it possible to combine purpose-built AI processors with a central processing unit (CPU) and graphics processing unit (GPU), each working in its own area. For example, the CPU is responsible for sequential control and immediacy, the GPU for streaming parallel data, and the neural processing unit (NPU) for core AI tasks involving scalar, vector and tensor arithmetic. Heterogeneous computing increases application performance, device thermal efficiency, and battery life to improve end-users’ experiences with generative AI.

What is the Role of NPUs?

Although this does not hold for every system, we can describe the NPU as a coprocessor. NPUs are designed to complement the functions of CPUs and GPUs. While CPUs handle a wide variety of tasks and GPUs excel at rendering detailed graphics, NPUs specialize in performing AI-driven tasks quickly. In this way, workloads are distributed across separate units, and the GPU and CPU do not have to run at full capacity while dealing with other work.

For example, in video calls, an NPU can efficiently handle the task of blurring the background, freeing the GPU to focus on more intensive tasks. Similarly, in photo or video editing, NPUs can perform object detection and other AI-related operations, improving the overall efficiency of the workflow.

Machine Learning (ML) Algorithms

Machine learning algorithms form the backbone of artificial intelligence applications. Although often confused with artificial intelligence, machine learning can be viewed as a type of artificial intelligence. These algorithms learn from data, make predictions and make decisions without explicit programming. There are four types of machine learning algorithms: supervised, semi-supervised, unsupervised, and reinforcement. NPUs play an important role in executing these algorithms efficiently, performing tasks such as training and inference, where large data sets are processed to improve models and make real-time predictions.

NPUs Will Be More Important in the Future

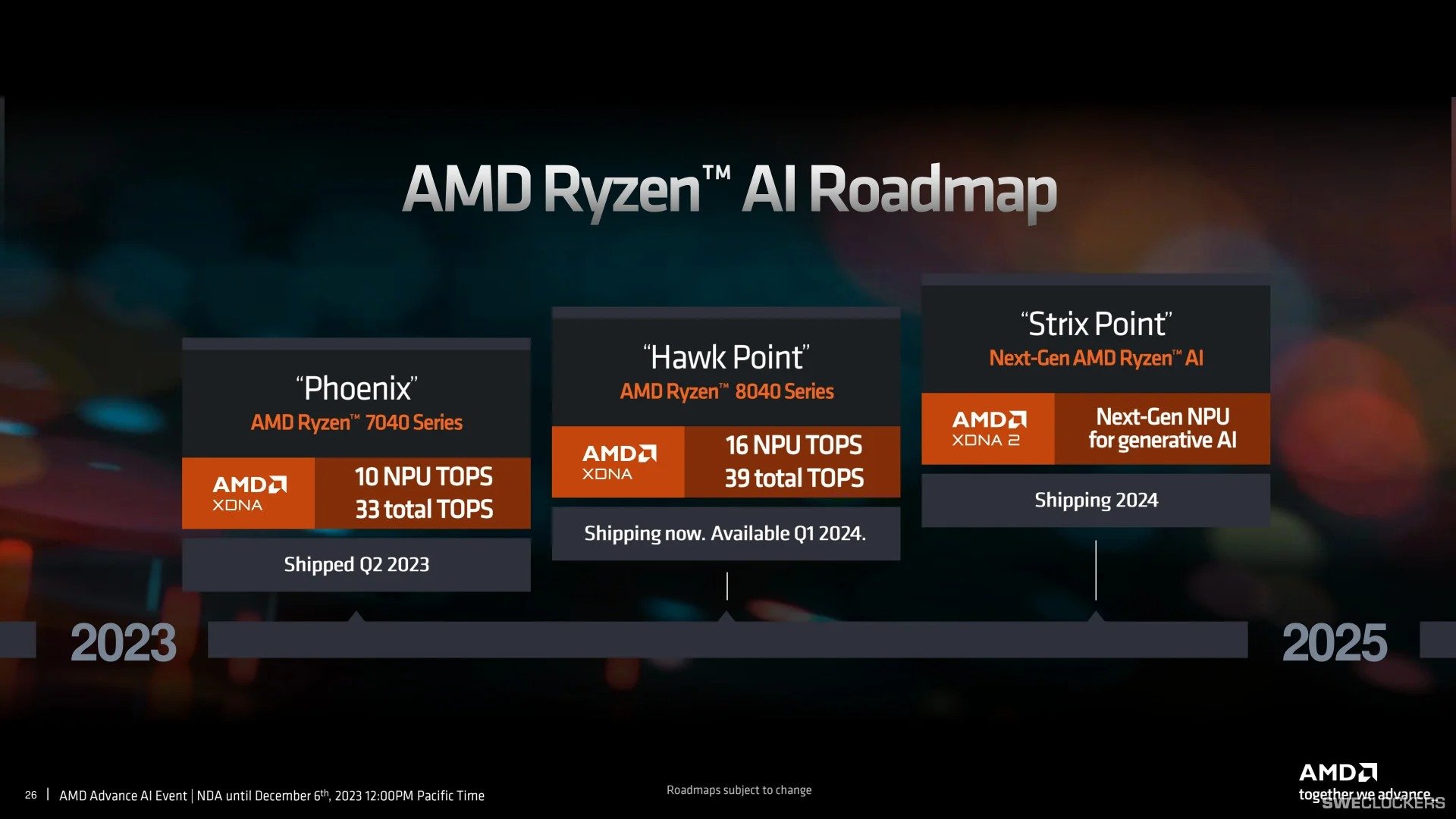

NPUs started to spread as of 2024, and perhaps every processor will contain NPUs in the future. Intel took its first step with Meteor Lake chips. AMD lit the fuse with its Ryzen 8040 mobile processors.

Inevitably, the demand for artificial intelligence-focused applications, where NPUs are at the forefront, will continue to increase. As a result, local (on-device) processing will become more important. The combination of GPNPUs and advances in machine learning algorithms will drive advancements we have never seen before, pushing technology forward and reshaping our digital environment.

Right now the NPU may seem insignificant to you, and that is normal. However, in the future, artificial intelligence functions will increasingly enter every operating system and application. Thus, the processing units we are talking about will become more important.

Artificial Intelligence Supported Computers Are Spreading

There are requirements that must be met for a computer to be considered ‘AI-powered’. Microsoft said that the NPU in AI computers should offer a minimum performance of 45 TOPS. Current generation chips from AMD and Intel cannot yet meet this requirement.

On the desktop side, Intel currently does not have processors with NPUs, so the only option is AMD. Intel, which appears in the mobile field with Meteor Lake processors, offers rival options to AMD’s Ryzen 8040 (Hawk Point) processors.

Remarkably, neither AMD’s nor Intel’s chips can meet Microsoft’s performance requirement of 45 TOPS. However, both companies say that they will meet it with their new generation processors codenamed Strix Point and Lunar Lake. The 45 TOPS requirement is meant to enable Microsoft Copilot to run AI elements natively. However, it is unknown how a future Windows update that enables this functionality will fare on current AMD and Intel processor generations. Qualcomm’s Snapdragon X Elite Arm chips will be released in the middle of the year with 45 TOPS NPU performance. Apple’s M3 processors offer 18 TOPS NPU performance, but Apple is not bound by Microsoft’s requirements.

Hardware support for artificial intelligence workloads helps alleviate critical privacy concerns in the commercial field. At the same time, latency, performance and battery life advantages are also offered for AI applications. As a side note, power efficiency can be achieved when an AI task is run on an NPU instead of a GPU.

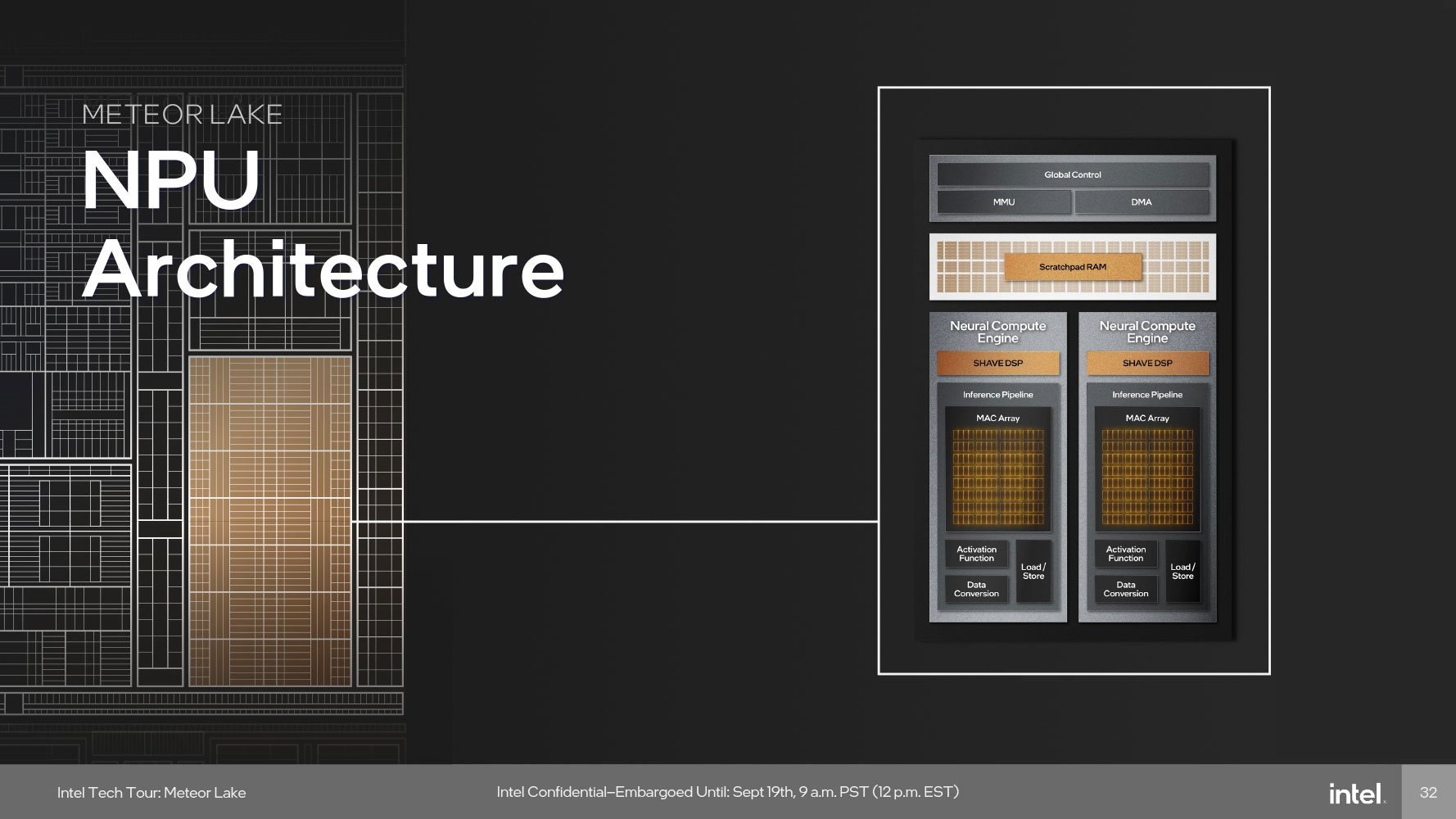

Intel Meteor Lake

Intel started a trend it called ‘AI PC’. Meteor Lake processors produced with Intel 4 (7nm) technology adopt Intel’s Foveros 3D hybrid design. They are also the first Intel processors to use neural processing units, or NPUs. The company claimed that the NPU (Apple, Qualcomm and AMD have their own dedicated NPUs) would usher in the “artificial intelligence PC” era.

Meteor Lake uses an NPU for the first time for power-efficient AI computing, alongside AI capabilities built into all of its compute engines. In this way, artificial intelligence tasks carried out by users in some software will be accelerated. In fact, artificial intelligence is not tied only to the NPU; the GPU and CPU are also optimized for AI workloads. However, the NPU was designed to focus solely on artificial intelligence tasks:

- GPU: The built-in GPU offers performance parallelism and efficiency, ideal for artificial intelligence in media, 3D applications and the rendering pipeline.

- NPU: The NPU (neural processing unit) is a low-power AI engine designed specifically for sustained AI and AI offload.

- CPU: The CPU has fast response times, ideally suited for lightweight, single-inference, low-latency AI tasks.
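The division of labor in the list above can be sketched as a simple routing rule. This is a hypothetical illustration only: the `AITask` fields and the `pick_engine` function are names invented for this example and do not correspond to any Intel API or to how a real scheduler decides.

```python
from dataclasses import dataclass


@dataclass
class AITask:
    name: str
    sustained: bool        # runs continuously, e.g. background blur in a call
    high_throughput: bool  # large parallel batches tied to media or 3D work


def pick_engine(task: AITask) -> str:
    """Hypothetical rule of thumb based on the three roles listed above."""
    if task.sustained:
        return "NPU"  # low-power engine for continuous, offloaded AI
    if task.high_throughput:
        return "GPU"  # parallelism for media, 3D and rendering work
    return "CPU"      # light, single-inference, low-latency work


tasks = [
    AITask("background blur", sustained=True, high_throughput=False),
    AITask("video upscaling", sustained=False, high_throughput=True),
    AITask("one-off text classification", sustained=False, high_throughput=False),
]
for task in tasks:
    print(f"{task.name} -> {pick_engine(task)}")
```

In practice the operating system, drivers and the application's AI runtime make this choice, and the criteria are far more detailed than two flags.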

Lunar Lake is on the way

Intel introduced the Lunar Lake processors that will power future computers at the Vision 2024 event. These processors will be sold as Intel Core Ultra 200V, part of the Intel Core Ultra 2 series, and promise significant improvements in artificial intelligence calculation speed compared to the Core Ultra 1 series. At the event, Intel CEO Pat Gelsinger emphasized that Lunar Lake processors will deliver a three-fold increase in AI performance and can exceed the 100 TOPS mark in total processing power.

AMD Ryzen 8040 “Hawk Point”

While talking about leaks regarding Windows 12, we also mentioned hardware-side artificial intelligence solutions. AMD’s XDNA engines are likewise dedicated to AI applications. Companies like AMD are also focusing on increasing artificial intelligence performance with hardware innovations. Alongside the XDNA 2 NPU, the chipmaker also introduced Ryzen AI Software, an application that allows enthusiasts and developers to deploy pre-trained AI models on the XDNA AI engine.

AMD points to improved NPU performance as one of the key selling points of the 8040 series. While the XDNA engine provided 10 TOPS performance in the previous 7040 models, this value increased to 16 TOPS with the 8040.
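To put these TOPS figures side by side, the short sketch below works out the generational uplift and compares each NPU with the 45 TOPS minimum mentioned earlier. It uses only numbers quoted in this article; the variable and function names are made up for the example, and TOPS alone says nothing about real-world application performance.

```python
REQUIRED_TOPS = 45  # Microsoft's minimum NPU figure as quoted in this article

# NPU performance figures quoted in this article, in TOPS.
npu_tops = {
    "Ryzen 7040 (XDNA)": 10,
    "Ryzen 8040 (XDNA)": 16,
    "Apple M3": 18,
    "Snapdragon X Elite": 45,
}


def uplift_percent(old, new):
    """Percentage increase going from old to new."""
    return (new - old) * 100 / old


gen_uplift = uplift_percent(npu_tops["Ryzen 7040 (XDNA)"],
                            npu_tops["Ryzen 8040 (XDNA)"])
meets_requirement = {name: tops >= REQUIRED_TOPS
                     for name, tops in npu_tops.items()}
shortfall = {name: max(0, REQUIRED_TOPS - tops)
             for name, tops in npu_tops.items()}

print(f"7040 -> 8040 uplift: {gen_uplift:.0f}%")
for name in npu_tops:
    status = "meets" if meets_requirement[name] else "misses"
    print(f"{name}: {status} the requirement, short by {shortfall[name]} TOPS")
```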

All five Ryzen 8040HS mobile chips include the XDNA-based NPU designed for artificial intelligence, more commonly known as ‘Ryzen AI’. We should point out that technically all of AMD’s Ryzen 7040 Series (Phoenix) CPUs have the NPU physically, but the company does not enable it in most models.

To remind you again, the NPU is not new hardware: it uses Xilinx IP, which AMD obtained through its acquisition of Xilinx. As vendors, manufacturers, software developers, and the entire world embrace the benefits and capabilities that AI can provide, AMD continues to integrate AI capabilities directly into processor silicon.

AMD is Preparing Strix Point

Mobile chips codenamed “Strix Point” were also mentioned during the launch. Strix Point processors, which are expected to be released in 2024, will offer up to three times more artificial intelligence performance thanks to the new XDNA 2 NPU artificial intelligence engine. Long story short, 2024 and 2025 will be years full of artificial intelligence.

AMD stated that the upcoming Strix Point APU series will be supported by the new XDNA2 AI architecture. This architecture is expected to provide up to three times the performance increase compared to the Hawk Point XDNA AI architecture.

ARM PCs: Qualcomm Snapdragon X Elite

Qualcomm will launch X Elite chips with 45 TOPS performance in mid-2024. The company published a short video showing that a Snapdragon X Elite laptop outperforms an Intel Core Ultra 7 laptop by up to 10 times in AI workloads.

The competition, then, is not only between AMD and Intel. According to the video description, the unnamed Snapdragon X Elite model offers “a breakthrough 45 TOPS NPU that delivers unique artificial intelligence capabilities.” NPU tests performed by Qualcomm include Stable Diffusion and AI rendering in GIMP. The tests ran on the on-device processors rather than in the cloud.

It’s no surprise that the upcoming Qualcomm chip has such an edge in the NPU test. Snapdragon X Elite can officially deliver 45 TOPS of performance to tackle AI workloads. Intel’s Core Ultra series can reach “34 TOPS” in the best scenario.

As we mentioned, Meteor Lake was Intel’s first processor family with an integrated NPU, and with the new generation Arrow Lake (desktop) and Lunar Lake (laptop), performance will be taken to the next level.

Microsoft Copilot Will Soon Run Natively on PCs

Let’s add that Microsoft’s Copilot AI service will soon run locally on PCs instead of in the cloud. For this, the technology giant will require a minimum NPU performance of 40 TOPS. According to the new definition made by Microsoft and Intel, an artificial intelligence computer will have an NPU, CPU and GPU, Microsoft’s Copilot, and a physical Copilot key directly on the keyboard. PCs that meet the requirements are already available, but this is only the first wave of the AI PC initiative.

Todd Lewellen, Vice President of Intel’s Client Computing Group, said the following on the subject:

“There will be a continuation or evolution and then we will move to the next generation of AI computer with 40 TOPS requirement on NPU. We have a new generation product that will be in this category.

And when we move to the next generation, it will enable us to run more stuff locally, just like they’ll run Copilot with more Copilot stuff running locally on the client. This may not mean that everything in Copilot runs natively, but you will get many core capabilities that you will see running on NPU.”

Currently Copilot computation occurs in the cloud, but executing the workload locally will provide latency, performance, and privacy benefits. Lewellen explained that Microsoft is focusing on customer experience with new platforms. Microsoft insists that Copilot run on the NPU rather than the GPU to minimize the impact on battery life.

AI NPUs Can Be Monitored via Windows Task Manager

AMD has confirmed that XDNA NPUs will soon be coming to Windows Task Manager via the Compute Driver Model. Currently Windows 11 can only monitor the NPUs on Intel’s new Core Ultra Meteor Lake CPUs, but this will change once updates arrive.

AMD will use Microsoft’s Compute Driver Model (MCDM) to enable Windows 11 to monitor AMD’s XDNA NPU usage. MCDM is part of the Microsoft Windows Display Driver Model (WDDM) and is designed specifically for compute-only processors such as NPUs. According to AMD, MCDM also allows Windows to manage the NPU, including power management and scheduling, much as it does for the CPU and GPU.

Soon multiple applications will be running on the NPU simultaneously. Thus, the hardware monitoring process through Windows will become more important.