What is ChatGPT-4o and How to Use it? – Technopath

Not a day goes by without another surprising innovation in the world of artificial intelligence. We regularly write about AI assistants such as OpenAI ChatGPT, Google Gemini, xAI Grok and Microsoft Bing. Technology giants are competing hard for a bigger slice of the artificial intelligence market and are allocating large budgets to the effort. The pace of AI development has become unsettling; it is increasingly hard to predict what may happen next. The AI model named “GPT-4o”, developed by OpenAI, brings brand new things to the table.

GPT-4o, a name you will hear more and more in the coming days, is OpenAI’s newest flagship large language model (LLM). It is a multimodal artificial intelligence model that brings many new features to both paid and free users. Compared with the earlier models behind ChatGPT, it understands questions better and gives faster, more logical answers.

OpenAI faces strong rivals such as Meta’s Llama 3 and Google’s Gemini. As one of the leading artificial intelligence companies, OpenAI aims to stay one step ahead of the game with its latest model.

What is ChatGPT-4o?

OpenAI’s ChatGPT chatbot has received a major update with the new GPT-4o model. Nicknamed Omni, the model is truly multimodal: it can natively understand text, images, video and audio. It is also much faster, with response times very close to how long it takes a person to understand and reply to something in everyday conversation.

GPT-4 Turbo was the flagship AI model until GPT-4o was released. It was available only to ChatGPT Plus subscribers and came with OpenAI-developed features such as custom GPTs and live web access. The good news is that GPT-4o has even more advanced features and capabilities. It costs half as much as GPT-4 Turbo and is twice as fast, which is probably why OpenAI can offer GPT-4o to both free and paid users. Paying users, however, get five times the GPT-4o usage limit.

The main difference from the previous free version of ChatGPT is that the new model offers improved reasoning, processing and natural language skills. OpenAI calls GPT-4o its “new flagship model that can reason across audio, vision, and text in real time,” making it the fastest and most capable model the company has released. It does not communicate only through text: it is multimodal, so it can accept any combination of text, audio and images as input and produce any combination of text, audio and images as output.

The lowercase “o” at the end of the name may have caught your attention. It stands for “omni,” meaning “all,” a reference to the model’s ability to work across text, audio and images.

OpenAI describes its new model as follows:

“GPT-4o is a step towards much more natural human-computer interaction; it accepts as input any combination of text, audio, image, and video and generates any combination of text, audio, and image outputs. It can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in a conversation. It matches GPT-4 Turbo performance on text in English and code, with significant improvement on text in non-English languages, while also being much faster and 50% cheaper in the API. GPT-4o is especially better at vision and audio understanding compared to existing models.”

Free ChatGPT users will now have access to the following features with GPT-4o:

- GPT-4-level intelligence.

- You can get answers from both the model and the web.

- You can analyze data and create graphs.

- You can chat about the photos you took.

- You can upload files for help summarizing, writing, or analyzing.

- You can use GPTs and the GPT Store.

- Access to the memory feature, so you don’t have to repeat the same information in every conversation.

How to Use ChatGPT-4o?

Regardless of paid or free version, the first thing you need to do to use ChatGPT is to log in.

- Open the ChatGPT application on your mobile phone and sign in to your account. If you are on a computer, visit https://chat.openai.com.

- If you do not have an account, you can quickly create one for free and then log in.

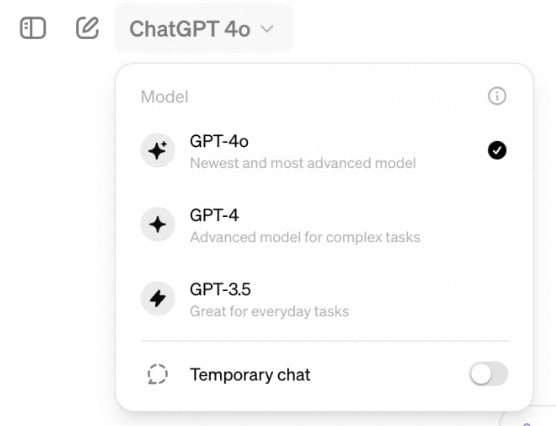

- In the upper left corner, check the drop-down menu for the GPT-4o option, labeled as OpenAI’s “latest and most advanced model.”

- If you have paid usage, the menu will look like this:


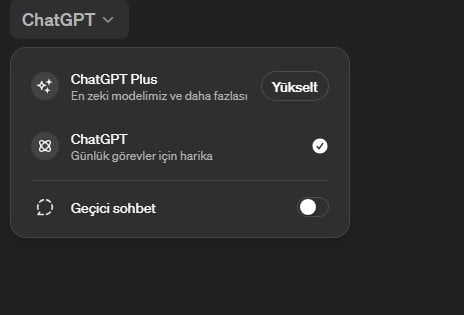

- If you are on the free plan and the new version has been rolled out to you, you do not need to select GPT-4o; free users are assigned to it automatically. Keep in mind that usage limits may vary depending on current usage and demand. When the GPT-4o limit is reached, free users are switched to GPT-3.5 by default. Free-tier access also comes with limits on advanced features, including data analysis, file uploads, browsing, and discovering and using GPTs.


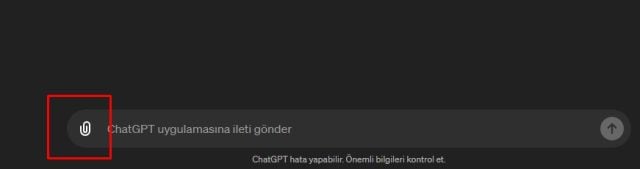

- If you can access GPT-4o and are on the free plan, you will now be able to submit files for analysis. You can send images, videos or even PDFs, and then ask questions about the content.


Availability and pricing

If you have been using the free version of ChatGPT for a while and want the features that ChatGPT Plus users have, we have good news for you. You can now analyze images and text files, upload files, find and use custom GPTs in the GPT Store, use memory so you don’t have to repeat yourself, run code snippets, analyze data and perform complex calculations, all free of charge within the usage limits.

In summary, GPT-4o gives you free access to much more advanced and faster GPT-4-level features. Millions of people use these AI chatbots, which means enormous demand for computing power and hardware. So how did GPT-4o make this possible? It is much cheaper to run computationally, and its new tokenizer represents text in many languages with fewer tokens, so a much wider user base can benefit from the innovations.

There’s something we need to point out before we move on. Free users can send only a limited number of GPT-4o messages during the day. Once that limit is exceeded, ChatGPT automatically switches to the GPT-3.5 model.
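The fallback rule above can be sketched in a few lines of Python. This is a hypothetical illustration, not OpenAI’s code: the real routing happens on OpenAI’s servers, and the free-tier cap of 10 messages below is an invented placeholder, since OpenAI varies the actual limit with demand. Only the fivefold limit for paying users and the switch to GPT-3.5 come from this article; the names `gpt4o_limit` and `model_for_free_user` are made up for the example.

```python
# Hypothetical placeholder: OpenAI does not publish a fixed free-tier cap,
# and the real number varies with current usage and demand.
FREE_GPT4O_LIMIT = 10
# From the article: paying users get five times the free GPT-4o usage limit.
PAID_LIMIT_MULTIPLIER = 5


def gpt4o_limit(paid: bool) -> int:
    """Return the GPT-4o message cap for a plan under the assumptions above."""
    return FREE_GPT4O_LIMIT * (PAID_LIMIT_MULTIPLIER if paid else 1)


def model_for_free_user(gpt4o_messages_used: int) -> str:
    """Pick the model that answers a free user's next message."""
    if gpt4o_messages_used < gpt4o_limit(paid=False):
        return "GPT-4o"
    # Once the cap is reached, free users fall back to GPT-3.5 automatically.
    return "GPT-3.5"


if __name__ == "__main__":
    for used in (0, 9, 10):
        print(used, model_for_free_user(used))
    print("Paid cap:", gpt4o_limit(paid=True))
```

With the placeholder cap, the first ten messages go to GPT-4o and the eleventh falls back to GPT-3.5, while a paying user’s cap works out to 50.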

OpenAI has said it wants to make its most advanced AI widely accessible whenever possible. This will not happen all at once; access is being rolled out gradually in groups. Not every feature is available yet, and there is no way to move yourself to the front of the queue; you simply have to wait. Most subscribers can already use it, and it will gradually become available to free ChatGPT users in the coming weeks. At the time of writing, Omni had just been introduced, and all features are expected to become available over time.

Much Faster

GPT-4 differed from GPT-3.5 in many ways, and speed was one of them: GPT-4 was much slower, even after the improvements of recent months and the release of GPT-4 Turbo. With GPT-4o, responses arrive almost instantly. Text prompts are understood, processed and answered much faster, and replies in voice conversations are also quick.

Response speed may not seem like a game-changing development, but it matters a great deal. If you have used various AI chatbots, you may have noticed that you sometimes wait quite a long time for an answer. With GPT-4o, you get almost instantaneous answers for tasks such as translation and voice assistance.

What Can GPT-4o Do?

The most important detail about GPT-4o is that it is “multimodal”. As we just said, it can work on audio, image, video and text. GPT-4 Turbo was like that too, but there are some differences.

OpenAI says it trained a single neural network end to end across all modalities. In the old voice mode with GPT-4 Turbo, one model converted your spoken words into text, GPT-4 interpreted and answered that text, and another model converted the answer back into a synthesized AI voice. With GPT-4o, all of this happens in one model. OpenAI says the response time when talking to GPT-4o is now just a few hundred milliseconds, about the same as a real-time conversation with another person.
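To make the difference concrete, here is a toy Python sketch of the two designs. Everything in it is hypothetical: `Speech`, the stage functions and their canned replies are invented stand-ins, not OpenAI components. It illustrates two points from the paragraph above: in a three-model cascade the tone of voice is lost as soon as speech becomes text, and the delays of the stages add up, whereas a single model receives the audio itself.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Speech:
    """Hypothetical stand-in for a clip of spoken audio."""
    words: str  # what was said
    tone: str   # how it was said, e.g. "excited"


def speech_to_text(clip: Speech) -> str:
    # Stage 1 of the old pipeline: transcription keeps the words, drops the tone.
    return clip.words


def text_only_model(prompt: str) -> str:
    # Stage 2: a text model only ever sees the transcript.
    return f"Reply to {prompt!r}"


def text_to_speech(text: str) -> Speech:
    # Stage 3: the reply is read out in a fixed voice.
    return Speech(words=text, tone="neutral")


def cascaded_voice_mode(clip: Speech) -> Speech:
    """Three separate models chained one after another."""
    return text_to_speech(text_only_model(speech_to_text(clip)))


def single_multimodal_model(clip: Speech) -> Speech:
    """One model takes the audio itself, so the tone is still available."""
    reply = f"Reply to {clip.words!r} (you sound {clip.tone})"
    return Speech(words=reply, tone=clip.tone)


def pipeline_latency(stage_seconds: list[float]) -> float:
    # Each stage must finish before the next starts, so their delays add up.
    return sum(stage_seconds)


if __name__ == "__main__":
    clip = Speech(words="I got the job!", tone="excited")
    print(cascaded_voice_mode(clip))
    print(single_multimodal_model(clip))
```

Running it prints a neutral-toned reply from the cascade and an excited-toned reply from the single model; the toy only shows where information is lost, not how GPT-4o works internally.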

Besides being much faster, GPT-4o can interpret non-verbal elements of speech, such as the tone of your voice, and it can put feeling and emotion into its own spoken replies.

Voice Communication

An earlier version of ChatGPT Voice was available in the iPhone and Android apps. It let you chat with the AI fairly naturally, but it did not process your voice directly; instead, it converted your speech into text and analyzed that. Although the latest iOS update appears to have removed this feature from the ChatGPT app for some users, OpenAI has stated that it should still be present, and it remains usable if you select the GPT-4 model rather than GPT-4o.

As part of the new model, new features are coming to ChatGPT’s voice mode. The app will be able to act as a voice assistant like the one in the film Her, responding in real time and observing the world around you. The current voice mode is more limited: it responds to one command at a time and works only with what it can hear.

GPT-4o was built from the ground up to take voice input and interact with users by voice. Unlike the old pipeline, it does not need to convert your speech into text, answer the text, and then turn that answer back into audio; it hears and perceives voices directly and can reply in kind. Its answers come so quickly that the exchange feels almost human.

Not Just a Robot: It Can Sense Emotion

GPT-4o can also pick up characteristics of a voice such as tone, speed and mood. In its spoken replies it can laugh, be sarcastic, and recognize when it has made a mistake. The answers are fluent and very close to natural spoken language, and you can interrupt it mid-answer, just as you would in a real conversation.

Simultaneous Translation in 50 Languages

Even better, it understands many languages and can translate instantly, so you can use it as a real-time translation tool. During the launch event, OpenAI CTO Mira Murati spoke in Italian while other OpenAI employees spoke in English, and the new model translated each side’s sentences for the other with almost no delay.

This capability could pose serious competition for translation and language-learning platforms. Shares of Duolingo, a well-known language-learning platform, began to fall right after the announcement.

Interaction, Voiceover and Songs

GPT-4o can sing, and in one demo it even performed a duet with another instance of itself. You can use it in many other ways too, for example to prepare for a job interview, sing, speak in different voices, create voice dialogue for a game project, tell jokes or tell stories.

Communication via video

Omni’s capabilities are not limited to sound: it can also communicate through video as well as text and images. In one demo, GPT-4o held a real-time conversation with a person using live video and audio. Much like a video chat with a human, it appears to interpret what it sees through the camera and draw sharp inferences. ChatGPT-4o can also keep track of much more information than earlier models, so it can apply its intelligence to longer conversations and larger amounts of data. If you want to write a novel, for example, Omni is now much more capable.

Improved Comprehension

GPT-4o understands you much better than its predecessors, especially when you talk to it. It is surprisingly good at perceiving tone and intention. If you ask it to be relaxed and friendly, it may start joking around to keep the conversation fun.

It also pays more attention to your intent when analyzing code or text, so it can give better answers, and you can get useful results from shorter requests. With video and images, it can make sense of its surroundings in context.

In several demos, OpenAI showed users filming the room they were in, with GPT-4o then describing the room.

What Abilities Does It Have?

GPT-4o has a wide range of capabilities. Here are some of them:

- Real-time interaction: GPT-4o can understand spoken conversations in real time and interpret and respond with almost no delay.

- Knowledge-based question answering: Like all previous GPT-4 models, GPT-4o is trained on a large body of knowledge and can answer questions.

- Summarizing and generating text: Like all previous GPT-4 models, GPT-4o can handle common LLM text tasks, including summarization and text generation.

- Multimodal reasoning and generation: GPT-4o can process a combination of data types and generate responses that combine text, audio and images within a single model. It understands audio, images and text with similar speed, and it can respond with audio, images and text.

- Language and voice processing: Omni has advanced capabilities to handle more than 50 different languages.

- Sentiment analysis: The model can detect user sentiment across text, audio and video.

- Voice nuance: GPT-4o will be able to produce speech with emotional nuances. This can make it an effective tool for applications that require sensitive and nuanced communication.

- Audio content analysis: GPT-4o is able to generate and understand spoken language, which can be applied in voice-activated systems, audio content analysis, and interactive storytelling.

- Real-time translation: GPT-4o’s multimodal capabilities support real-time translation between more than 50 languages.

- Image and video understanding: Users can upload visual content such as images and videos, which GPT-4o can understand, explain and analyze.

- Data analysis: Vision and reasoning abilities can enable users to analyze data contained in data tables. GPT-4o will also be able to create data graphs based on analysis or a command.

- File upload: To go beyond its training data, GPT-4o supports file uploads, allowing users to provide specific data for analysis.

- Memory and contextual awareness: GPT-4o can remember previous interactions and maintain context over longer conversations.

- Large context window: With a context window of up to 128,000 tokens, it can stay consistent over long conversations or documents, making it suitable for detailed analysis.

- Reduced hallucination and improved safety: The model is designed to minimize false or misleading output, and GPT-4o includes enhanced safety measures to help keep its output appropriate and safe for users.
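What does a 128,000-token window mean in practice? This Python sketch gives a rough answer under one stated assumption: OpenAI’s rule of thumb that one token is about four characters of English text. It is not a real tokenizer, so counts for other languages or code will differ. By the same rule of thumb (about three quarters of a word per token), 128,000 tokens is very roughly 96,000 English words. The window sizes are the ones stated in this article; `estimate_tokens` and `fits_in_context` are illustrative names, not part of any OpenAI library.

```python
import math

# Context window sizes in tokens, as stated in this article.
CONTEXT_WINDOW = {"GPT-4": 8_192, "GPT-4 Turbo": 128_000, "GPT-4o": 128_000}

# Rough rule of thumb for English text; real tokenizers vary by language and content.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate the token count from character length (not a real tokenizer)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(text: str, model: str, reply_budget: int = 0) -> bool:
    """Check whether the text plus room for the reply fits in the model's window."""
    return estimate_tokens(text) + reply_budget <= CONTEXT_WINDOW[model]


if __name__ == "__main__":
    document = "a" * 400_000  # stand-in for a 400,000-character document
    print(estimate_tokens(document))
    print(fits_in_context(document, "GPT-4o"))
    print(fits_in_context(document, "GPT-4"))
```

A 400,000-character document comes out at about 100,000 tokens, so it fits in GPT-4o’s window but not in the original GPT-4’s 8,192 tokens. Reserving room for the reply matters: with 30,000 tokens set aside, the same document no longer fits.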

macOS App

Native AI in Windows is currently limited to Copilot. macOS users, however, will soon be able to use ChatGPT and the new GPT-4o model directly from the desktop through a new native application. The app will roll out to ChatGPT Plus users first in the coming days and to free users in the following weeks.

ChatGPT Windows Version

There is currently no Windows version of ChatGPT. Microsoft offers its own assistant, Copilot, which is also powered by OpenAI’s GPT models. OpenAI has said it plans to release a Windows version, like the macOS app, later in 2024.

GPT-4 vs GPT-4 Turbo vs GPT-4o

Here are the differences between GPT-4, GPT-4 Turbo and GPT-4o versions:

| Feature | GPT-4 | GPT-4 Turbo | GPT-4o |
|---|---|---|---|
| Release date | March 14, 2023 | November 2023 | May 13, 2024 |
| Context window | 8,192 tokens | 128,000 tokens | 128,000 tokens |
| Knowledge cutoff | September 2021 | April 2023 | October 2023 |
| Input types | Text, limited image processing | Text, images (enhanced) | Text, images, audio (fully multimodal) |
| Vision abilities | Basic | Improved, with image generation via DALL-E 3 | Advanced vision and audio capabilities |
| Multimodal capabilities | Limited | Advanced image and text processing | Full integration of text, images and audio |
| Cost | Standard | Input tokens three times cheaper than GPT-4 | 50% cheaper than GPT-4 Turbo |
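Chaining the two cost ratios from the table gives GPT-4o’s input price relative to the original GPT-4. This Python sketch uses only those relative factors, not actual dollar prices, and assumes the 50% saving applies to input tokens: one third times one half is one sixth. Exact fractions are used so no floating-point rounding creeps in.

```python
from fractions import Fraction

# Relative price per input token, with GPT-4 as the baseline (= 1).
# Only the ratios stated in the table are used; no real prices are assumed.
GPT4 = Fraction(1)
GPT4_TURBO = GPT4 / 3   # input tokens "three times cheaper" than GPT-4
GPT4O = GPT4_TURBO / 2  # "50% cheaper" than GPT-4 Turbo

if __name__ == "__main__":
    for name, price in [("GPT-4", GPT4), ("GPT-4 Turbo", GPT4_TURBO), ("GPT-4o", GPT4O)]:
        print(f"{name}: {price} x GPT-4 input price")
```

The last line printed shows GPT-4o at 1/6 of GPT-4’s input price, which is why the same hardware budget can serve many more users.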

What is a Large Language Model?

In simple terms, a language model is a system trained on huge amounts of text that produces logical, human-like answers to what you ask the AI to do. When we make a request, the chatbots offered by various companies rely on these large language models, which we call LLMs, to find an answer.

An LLM is a deep learning model that can recognize, summarize, translate, predict and generate text and other forms of content based on knowledge gained from massive datasets. Besides powering natural language processing applications such as translation, chatbots and AI assistants, LLMs are used in healthcare, software development and many other fields.

How do LLMs turn all this data into answers? The first step is to learn from the data through a process called deep learning.

As mentioned, large language models are fed large volumes of data. As the name suggests, the size of the training dataset is at the heart of an LLM, and the definition of “large” keeps growing as AI advances. Today’s large language models are typically trained on datasets big enough to cover a large share of the text written on the Internet over many years.

This huge amount of text is fed into the algorithm using unsupervised learning, in which the model is given data without explicit instructions on what to do with it. This way, a large language model learns not only words but also the relationships between words and the concepts behind them.

You can compare a large language model to a child who is starting to talk and learn: with AI algorithms and the data it is given, the model gradually improves. Another analogy: a person fluent in a language can predict what is likely to come next in a sentence or paragraph, and can even coin new words and concepts. In the same way, a large language model uses what it has learned to predict and generate content.
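The next-word idea can be shown with a toy model. The Python sketch below is a simple bigram counter, a much older and far simpler technique than the neural networks behind GPT-4o, so treat it only as an illustration of two points from the text: the model learns from raw text without labels, and it generates content by repeatedly predicting a likely next word. The function names and the tiny training sentence are made up for the example.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the raw text (no labels needed)."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if nothing ever followed it."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def generate(follows: Dict[str, Counter], start: str, max_words: int) -> str:
    """Greedily extend `start` one predicted word at a time."""
    words = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


if __name__ == "__main__":
    model = train_bigrams("the cat sat on the mat and the cat slept")
    print(predict_next(model, "the"))
    print(generate(model, "the", 4))
```

Because “cat” follows “the” twice and “mat” only once, the model predicts “cat” after “the”. A real LLM replaces these raw counts with a neural network that weighs the whole preceding context, not just the previous word.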