How Did the GPU Come to Dominate AI and Computing?

Thirty years ago, CPUs and other specialized processors handled most computing tasks. GPUs were mainly used to speed up drawing 2D shapes in Windows and other applications, and had no other significant functions. Fast forward to today, and GPUs have become one of the most important chips in the technology industry.

Today, GPUs play a crucial role in machine learning and high-performance computing, where their processing power is heavily relied upon. In effect, GPUs have evolved from graphics craftsmen into professors of complex mathematics.

In the Beginning, CPUs Carried the Flag

In the late 1990s, CPUs were the dominant force in high-performance computing. They powered the supercomputers used in scientific research, handled data processing on servers, and ran engineering and design work on workstations. Two types of CPUs were used at the time: special processors designed for specific purposes, and off-the-shelf chips from companies such as AMD, IBM or Intel.

A notable example of a powerful computer from this period was the ASCI Red supercomputer, built around 1997. It had a total of 9,632 Intel Pentium II Overdrive CPUs, each running at 333 MHz, and the theoretical peak computing performance of the entire system was just over 3.2 TFLOPS.

Since we will talk about TFLOPS frequently in this article, it is worth explaining what the term means; we have also covered it in more detail in a previous article. FLOPS stands for floating-point operations per second, and one TFLOPS is one trillion such operations per second. In computer science, floating-point numbers represent non-integer values, that is, decimal numbers such as 7.4615 or 0.0143. Integers (whole numbers) are frequently used in the calculations needed to control a computer and the software running on it. Floating-point numbers, on the other hand, are essential wherever fractional precision matters, especially in science and engineering.

Even a simple calculation like determining the circumference of a circle uses at least one floating-point value (π). For decades, CPUs have had separate circuits for executing logic operations on integers and on floating-point numbers. The Pentium II Overdrive processor mentioned above can perform one basic floating-point operation per clock cycle. In theory, therefore, ASCI Red’s floating-point performance is 9,632 CPUs × 333 million clock cycles per second × 1 operation per cycle = 3,207,456 million FLOPS, or about 3.2 TFLOPS. These figures assume ideal conditions that are rarely achieved in practice, but they give a good indication of a system’s potential power.
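The theoretical peak follows the simple formula used above: processors × clock rate × floating-point operations per cycle. Here is a minimal Python sketch of that arithmetic using the ASCI Red figures from this article; the helper name `peak_flops` is our own, purely for illustration.

```python
def peak_flops(processors: int, clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical peak = processors x clock cycles per second x FLOPs per cycle."""
    return processors * clock_hz * flops_per_cycle


# ASCI Red: 9,632 CPUs at 333 MHz, one floating-point operation per cycle each
asci_red = peak_flops(9_632, 333e6, 1)
print(f"ASCI Red peak: {asci_red / 1e12:.3f} TFLOPS")  # ASCI Red peak: 3.207 TFLOPS
```

Real systems never sustain this number, which is why it is called a theoretical peak.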

Other supercomputers from that period used a similar number of standard processors. For example, Blue Pacific at Lawrence Livermore National Laboratory used 5,808 IBM PowerPC 604e chips, while Blue Mountain at Los Alamos National Laboratory had 6,144 MIPS Technologies R10000 processors. These CPUs were backed by large amounts of RAM and hard disk storage. So many CPUs were needed because supercomputers deal with multidimensional mathematical equations. At school we start with simple one-dimensional equations, where everything is represented by a single number such as distance or time. Accurately modeling and simulating real-world events, however, requires more dimensions.

To handle these multidimensional equations, mathematics uses vectors, matrices and tensors, which group multiple values together. When a computer performs calculations on these entities, it must process many numbers at once. At that time, CPUs could only process one or two floating-point numbers per cycle, so thousands of CPUs had to work together to reach the required processing power. This is how supercomputers reached performance measured in teraflops.
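To see why matrix math overwhelmed the CPUs of the day, it helps to count the operations. Multiplying two n×n matrices the straightforward way needs n multiplications and n additions for each of the n² output entries, or 2n³ floating-point operations in total. The plain-Python sketch below is only an illustration (not how supercomputers actually implemented it); `matmul_with_count` is a hypothetical helper that counts operations as it goes.

```python
def matmul_with_count(a, b):
    """Naive matrix multiply that also counts floating-point operations."""
    n, m, p = len(a), len(b), len(b[0])
    assert all(len(row) == m for row in a), "inner dimensions must match"
    flops = 0
    result = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            acc = 0.0
            for k in range(m):
                acc += a[i][k] * b[k][j]  # one multiply + one add
                flops += 2
            result[i][j] = acc
    return result, flops


a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
c, flops = matmul_with_count(a, b)
print(c)      # [[19.0, 22.0], [43.0, 50.0]]
print(flops)  # 16, i.e. 2 * 2**3

# A 1,000 x 1,000 product needs 2 * 1000**3 = 2 billion operations,
# roughly 6 seconds for one CPU doing one operation per cycle at 333 MHz.
print(2 * 1000**3 / 333e6)
```

Because the cost grows with the cube of the matrix size, doubling a model’s resolution in each dimension multiplies the work eightfold, which is why so many processors were needed.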

SIMD Technology Also Joined the Fight: Here Comes MMX, 3DNow! and SSE

In 1997, Intel updated its Pentium CPU line with a technology called MMX. It added eight 64-bit registers to the processor core, each able to hold one to eight integers depending on their size. This allowed the processor to execute a single instruction on multiple numbers simultaneously, an approach called SIMD (Single Instruction, Multiple Data).
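The SIMD idea can be imitated in software by packing several small integers into one wide register and adding them all with a single operation. The Python sketch below treats a 64-bit integer as four 16-bit lanes, similar to how an MMX register holds packed 16-bit values. The masking trick (often called SWAR, "SIMD within a register") keeps carries from spilling between lanes. This illustrates the concept only; it is not the MMX instruction set, and the helper names are hypothetical.

```python
LANES = 4
LANE_BITS = 16
LANE_MASK = (1 << LANE_BITS) - 1
# Top bit of each 16-bit lane: 0x8000800080008000
HIGH_BITS = sum(1 << (LANE_BITS * i + LANE_BITS - 1) for i in range(LANES))


def pack(values):
    """Pack four 16-bit integers into one 64-bit word (lane 0 in the low bits)."""
    word = 0
    for i, v in enumerate(values):
        word |= (v & LANE_MASK) << (LANE_BITS * i)
    return word


def unpack(word):
    """Split a 64-bit word back into its four 16-bit lanes."""
    return [(word >> (LANE_BITS * i)) & LANE_MASK for i in range(LANES)]


def packed_add(a, b):
    """Add all four lanes at once; each lane wraps around at 2**16 independently."""
    # Sum the low 15 bits of every lane (no carry can cross a lane boundary),
    # then fix up each lane's top bit with XOR.
    low_sum = (a & ~HIGH_BITS) + (b & ~HIGH_BITS)
    return low_sum ^ ((a ^ b) & HIGH_BITS)


x = pack([1, 2, 3, 0xFFFF])
y = pack([10, 20, 30, 1])
print(unpack(packed_add(x, y)))  # [11, 22, 33, 0]
```

Hardware SIMD units do the same thing without the masking tricks, because the lane boundaries are built into the circuitry.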

A year later, AMD introduced its own version, called 3DNow!, which was considered superior because its registers could store floating-point values, not just integers. It took Intel another year to address this limitation of MMX: the Pentium III introduced SSE (Streaming SIMD Extensions), which let the processor handle floating-point values as well.

As we entered the 2000s, computer designers had access to processors that handled vector math efficiently and, used in large numbers, could process matrices and tensors effectively. Despite this progress, supercomputers still relied on older or custom chips, because these new processors were not designed specifically for such tasks. The same was true of a rapidly growing type of chip that was even better at SIMD work than any CPU from AMD or Intel: the GPU.

In the early days of graphics processors, CPUs and GPUs had different roles in rendering. CPUs calculated the geometry of the objects in a 3D scene, while GPUs specialized in adding color and texture to individual pixels, work that involves a great deal of vector math. Top consumer graphics cards of the time, such as the 3dfx Voodoo5 5500 and NVIDIA GeForce 2 Ultra, were excellent at these SIMD operations, but they were optimized mainly for gaming and rendering.

ATI’s $2,000 FireGL 3 was designed to accelerate graphics in programs such as 3D Studio Max and AutoCAD. It carried two IBM chips, one for geometry processing (GT1000) and one for rasterization (RC1000), along with a large amount of memory (128 MB), and was claimed to deliver 30 GFLOPS. Even so, GPUs of this period were used almost entirely for graphics and did little floating-point math: turning 3D objects into images on a monitor relied heavily on integer calculations.

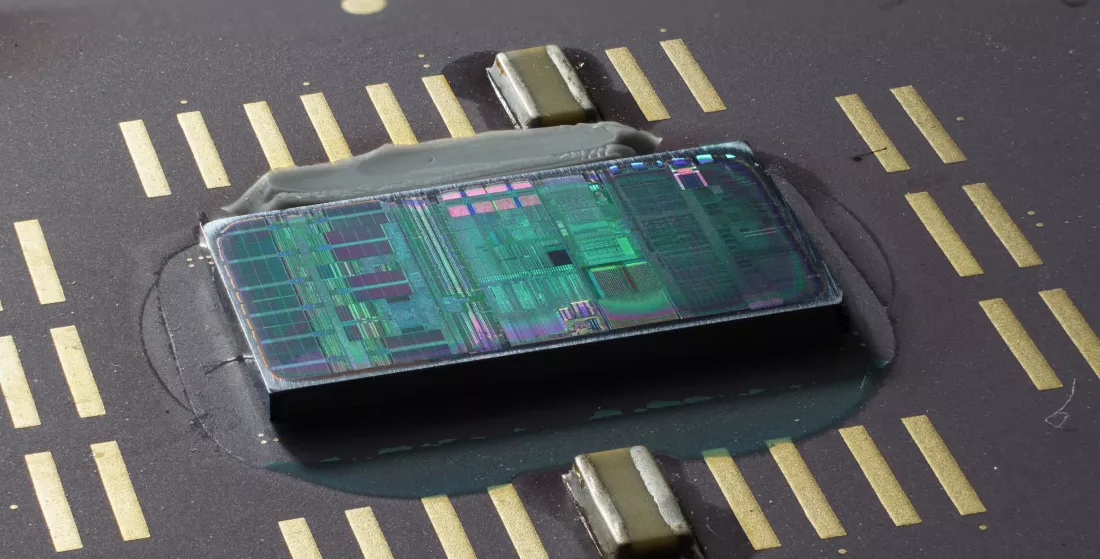

It took several years for graphics cards to incorporate floating-point operations into their pipelines. ATI’s R300 was one of the first GPUs to process math at 24-bit floating-point precision. However, because the hardware and software were built solely for rendering, this power was limited to graphics tasks. Engineers realized that GPUs had a significant amount of SIMD power but no way to use it elsewhere. Surprisingly, it was a game console that showed how to harness the GPU for non-graphics work.

A New Era of General-Purpose Computing Begins

Microsoft’s Xbox 360 game console was released in November 2005. Its CPU was designed and manufactured by IBM and used the PowerPC architecture, while its GPU was designed by ATI and produced by TSMC. What made the Xbox 360 special was the design of that GPU.

GPUs normally had separate parts, called vertex and pixel pipelines, to handle different stages of graphics processing. The Xbox 360’s GPU, codenamed Xenos, took a different approach and combined these pipelines into a single unified design. This sparked a new way of thinking about how to build graphics chips; it became a model for later designs and is still used in many modern GPUs.

Instead of one large processor, Xenos had three groups of smaller processors called SIMD arrays. Each array had 16 processors, and each processor had five math units, which allowed an array to execute two instructions per thread simultaneously. This design, known as a unified shader architecture, let any processor handle any type of graphics work. It made other parts of the chip more complex, but it set a new standard for future designs.

The Xenos chip ran at 500 MHz, which gave it a theoretical peak of 240 billion floating-point operations per second (240 GFLOPS) across its three SIMD arrays. To put this into perspective, some of the most powerful supercomputers of a decade earlier couldn’t match it: in 1994, the Intel Paragon XP/S140 had 3,680 CPUs but could reach only 184 GFLOPS. CPUs had already included SIMD units for several years, but they were much weaker than the arrays in Xenos. The chip was a significant advance in graphics processing power and helped push GPU development forward.
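The 240 GFLOPS figure can be reproduced from the chip layout, assuming Xenos had three SIMD arrays and that each math unit completes one multiply-add (counted as two floating-point operations) per clock cycle. Both assumptions are ours, chosen because they match the published figure. The sketch also works out the per-CPU rate of the Paragon XP/S140 for comparison.

```python
arrays = 3                    # assumed number of SIMD arrays in Xenos
processors_per_array = 16
math_units_per_processor = 5
flops_per_unit_per_cycle = 2  # one multiply-add = 2 FLOPs (assumption)
clock_hz = 500e6

xenos_peak = (arrays * processors_per_array * math_units_per_processor
              * flops_per_unit_per_cycle * clock_hz)
print(f"Xenos: {xenos_peak / 1e9:.0f} GFLOPS")  # Xenos: 240 GFLOPS

# Paragon XP/S140: 184 GFLOPS spread over 3,680 CPUs
paragon_per_cpu = 184e9 / 3_680
print(f"Paragon: {paragon_per_cpu / 1e9:.2f} GFLOPS per CPU")  # Paragon: 0.05 GFLOPS per CPU
```

One console GPU therefore matched the theoretical output of thousands of early-1990s supercomputer CPUs.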

When PC graphics cards adopted GPUs with a unified shader architecture, they far exceeded the Xbox 360’s processing speed. These cards were expensive, but they were well suited to high-end scientific computing. Even so, supercomputers around the world continued to run on standard CPUs, and it would be a few more years before GPUs made their way into these systems.

Why Stick with CPUs When GPUs Are Better at Processing Speed?

Supercomputers and similar systems are very expensive to build and run. They had been built around large numbers of CPUs, so adding another type of processor wasn’t easy; such changes required careful planning and testing. Making sure all components, especially the software, worked together smoothly was also a challenge for GPUs at the time. GPUs were programmable, but software for using them outside graphics was limited. Unified shader GPUs began to change this, because their flexible shader units could be used for more than rendering.

In 2006, ATI and NVIDIA released software toolkits, called CTM and CUDA respectively, that let computers use graphics cards for more than graphics, aiming to unlock their power for scientific and data processing tasks. What the scientific community really needed, however, was a comprehensive framework that could treat CPUs and GPUs as a single system. That need was met in 2009 with the release of OpenCL, a framework developed by Apple and later adopted by the Khronos Group. OpenCL became a standard platform for using GPUs for general-purpose computing beyond the graphics we encounter every day.
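CUDA and OpenCL share the same basic programming model: you write a small function (a "kernel") that describes the work for one data element, and the runtime launches it across many GPU threads, each with its own index. The Python sketch below only imitates that model sequentially; `launch` is a hypothetical stand-in for a real kernel launch, not part of either API. It uses SAXPY (y = a·x + y), a classic first GPGPU example.

```python
def saxpy_kernel(i, a, x, y, out):
    """Work for a single thread: compute one output element."""
    if i < len(x):  # guard, since launches are often rounded up to a full block
        out[i] = a * x[i] + y[i]


def launch(kernel, num_threads, *args):
    """Hypothetical stand-in for a GPU launch: run the kernel once per thread index.
    A GPU would execute these calls in parallel; here they run one after another."""
    for thread_index in range(num_threads):
        kernel(thread_index, *args)


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)
launch(saxpy_kernel, 8, 2.0, x, y, out)  # 8 "threads" for 4 elements, like a padded block
print(out)  # [12.0, 24.0, 36.0, 48.0]
```

Because each thread touches only its own element, the work can be spread over thousands of GPU cores without coordination, which is exactly the kind of SIMD-friendly job GPUs excel at.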

Now the GPU Has Joined the Computing Race Too

Although we at Technopat have published thousands of reviews to date, few people in the world of technology reviews test whether supercomputers perform as well as claimed. There is, however, a project called TOP500, launched at the University of Mannheim in Germany in the early 1990s, which publishes a list twice a year ranking the world’s 500 most powerful supercomputers.

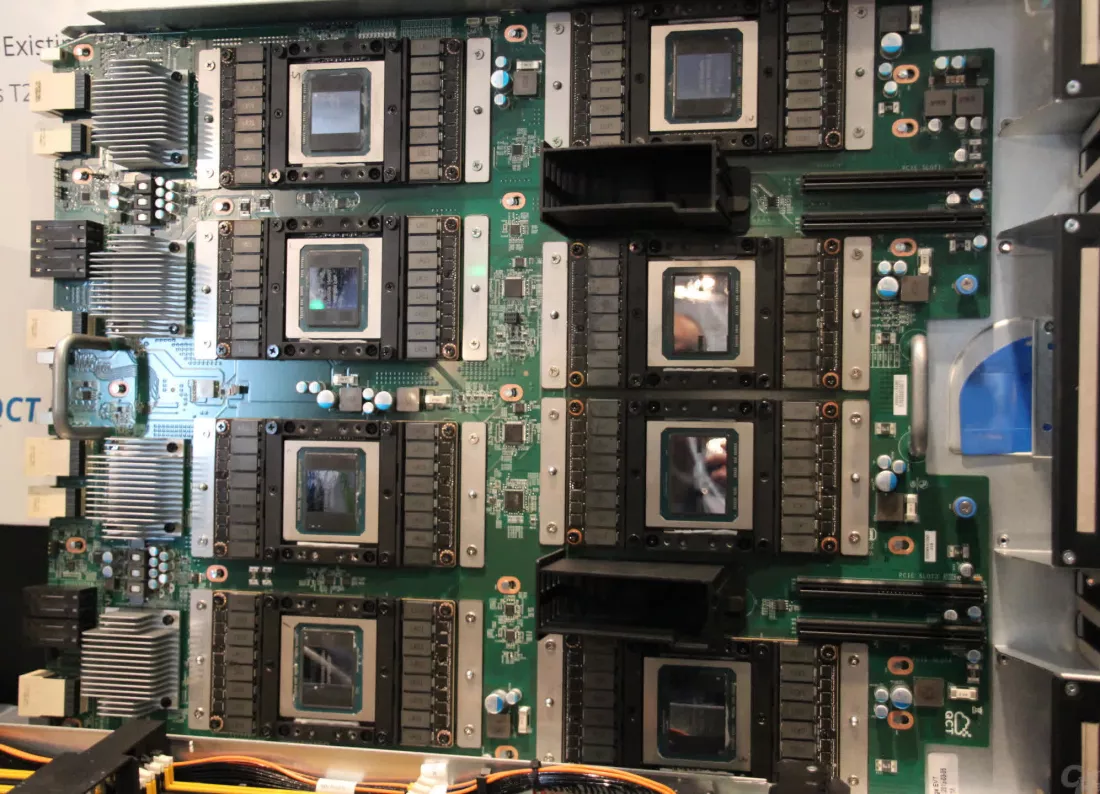

In 2010, the first supercomputers with graphics processing units appeared on the list. Two of them, Nebulae and Tianhe-1, were in China. Nebulae used the Tesla C2050, a chip similar to NVIDIA’s GeForce GTX 470 graphics card, while Tianhe-1 used AMD’s Radeon HD 4870. These GPUs boosted the systems’ computing power considerably: Nebulae had a theoretical peak performance of 2,984 TFLOPS, or nearly 3 quadrillion floating-point operations per second.

NVIDIA was the preferred vendor for high-end GPGPU (general-purpose computing on graphics processing units) thanks to its software support (no surprise there), even though AMD offered better raw processing performance. While NVIDIA’s CUDA developed rapidly, AMD had to rely on OpenCL. Intel’s Xeon Phi also entered the market as a CPU-GPU hybrid, but NVIDIA’s Tesla C2050 beat it on speed and power consumption.

Over time, the competition between AMD, Intel and NVIDIA in GPGPU became intense, as each pursued designs with different strengths: one model might have more processing cores, another a higher clock speed, another a better cache system. GPUs can perform certain tasks faster than a regular CPU thanks to their SIMD performance, but thousands of CPUs connected together can still be effective, though not as power-efficient as GPUs.

CPUs remain important for many types of computing, and many supercomputers use AMD or Intel processors. Still, the efficiency gap is clear when the Radeon HD 4870 GPU used in Tianhe-1 is compared with AMD’s 12-core Opteron 6176 SE CPU. The CPU drew about 140 watts and could theoretically reach 220 GFLOPS; the GPU delivered 1,200 GFLOPS for just 10 watts more, at a lower cost.
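Dividing peak throughput by power makes the gap concrete. The sketch below uses the figures quoted above and assumes the GPU drew about 150 W (the CPU’s 140 W plus the 10 W difference). These are theoretical peaks, not measured results.

```python
chips = {
    # name: (theoretical peak in GFLOPS, approximate power in watts)
    "Opteron 6176 SE (CPU)": (220, 140),
    "Radeon HD 4870 (GPU)": (1_200, 150),  # 140 W + 10 W, as stated in the text
}

for name, (gflops, watts) in chips.items():
    print(f"{name}: {gflops / watts:.1f} GFLOPS per watt")
# Opteron 6176 SE (CPU): 1.6 GFLOPS per watt
# Radeon HD 4870 (GPU): 8.0 GFLOPS per watt
```

On these numbers the GPU offers roughly five times the peak throughput per watt, which matters enormously in a machine drawing megawatts.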

A Small Chip with Big Capabilities

A few years later, not only supercomputers but other computers too began using GPUs to run many calculations in parallel. NVIDIA stepped up again with GRID, a platform for virtualizing GPUs for scientific and other uses. GRID was originally created for cloud gaming, but growing demand for powerful, affordable GPU computing pushed it toward broader use. At its annual technology conference, NVIDIA presented GRID as an important tool for engineers across industries. At the same event, NVIDIA also previewed an upcoming architecture called Volta. Few details were given, but Volta was expected to be another architecture used across all of NVIDIA’s markets.

Meanwhile, AMD was using its Graphics Core Next (GCN) architecture in server and workstation cards such as FirePro and Radeon Sky, as well as in its gaming-focused Radeon series. The FirePro W9100, with a peak of 5.2 TFLOPS in 32-bit floating-point calculations, intensified the competition. GPUs still had limitations in high-precision floating-point math (FP64 and above), however, and in 2015 only a few supercomputers used GPUs compared with those using CPUs. That changed in 2016, when NVIDIA introduced the Pascal architecture. Pascal was NVIDIA’s first GPU design built specifically for high-performance computing, and it brought a major improvement in FP64 capability, with roughly 2,000 cores dedicated to such calculations.

The Tesla P100 was seriously powerful, offering over 9 TFLOPS of FP32 and around 4.5 TFLOPS of FP64 performance. AMD’s Radeon Pro WX 9100, built on the Vega 10 chip, was 30% faster at FP32 but many times slower at FP64. Around that time, Intel was considering ending work on the Xeon Phi due to low sales. A year later, NVIDIA released a new architecture called Volta, which showed that the company was interested not only in high-performance computing and data processing but also in another market entirely.

Neurons on One Side, Networks on the Other: Deep Learning Arrives

Deep learning is a subset of machine learning, which is itself a branch of artificial intelligence. It uses complex mathematical models called neural networks to extract information from data. For example, a model can estimate the likelihood that an image shows a particular animal. To do this, it must be trained on many images that show the animal and many that do not. The mathematics behind deep learning is based on matrix and tensor calculations. In the past, this kind of work was possible only on supercomputers, but GPUs turned out to be well suited to it.
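At its core, each layer of a neural network is a matrix-vector (or, for a batch of inputs, matrix-matrix) multiplication followed by a simple nonlinearity. The toy sketch below uses made-up weights and hypothetical image features: one fully connected layer turns three numbers into a score, and the logistic sigmoid squashes it into a probability-like value. Real image models perform the same kind of arithmetic with millions of weights, which is why GPU matrix throughput matters.

```python
import math


def dense(weights, bias, inputs):
    """One fully connected layer: output[j] = sum_k weights[j][k] * inputs[k] + bias[j]."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Made-up weights for illustration; training would learn these from labeled images.
weights = [[0.5, -1.0, 2.0]]
bias = [0.0]
features = [2.0, 1.0, 0.0]  # hypothetical features extracted from an image

logit = dense(weights, bias, features)[0]
print(logit)           # 0.0
print(sigmoid(logit))  # 0.5, i.e. the untrained model is undecided
```

Training repeats this forward pass, plus a similar backward pass, over huge numbers of images, so almost all the time goes into the multiply-and-add work inside `dense`.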

To stand out in the deep learning market, NVIDIA added tensor cores to its Volta architecture. These are specialized logic units that work together but do only one kind of job: multiplying and accumulating small matrices. The Tesla V100, built on the GV100 GPU, had 640 tensor cores, each able to perform 64 multiply-add operations per clock cycle. Depending on the size of the matrices and the number format used, the Tesla V100 could theoretically reach 125 TFLOPS in these tensor calculations.
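A Volta tensor core multiplies two 4×4 matrices and adds a third (D = A×B + C); that is 4×4×4 = 64 multiply-adds, which is where the "64 operations per clock" figure comes from. The sketch below shows that operation in plain Python, then reproduces the 125 TFLOPS peak assuming 640 tensor cores, two FLOPs per multiply-add, and a boost clock of about 1.53 GHz. The clock value is our assumption for the SXM2 version of the card, and `mma_4x4` is a hypothetical helper name.

```python
def mma_4x4(a, b, c):
    """D = A x B + C for 4x4 matrices: the operation one tensor core performs."""
    n = 4
    d = [[c[i][j] for j in range(n)] for i in range(n)]
    fmas = 0
    for i in range(n):
        for j in range(n):
            for k in range(n):
                d[i][j] += a[i][k] * b[k][j]  # one multiply-add
                fmas += 1
    return d, fmas


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
ones = [[1.0] * 4 for _ in range(4)]
d, fmas = mma_4x4(identity, ones, ones)
print(d[0])   # [2.0, 2.0, 2.0, 2.0]
print(fmas)   # 64

# Peak: tensor cores x multiply-adds per clock x 2 FLOPs each x clock rate
tensor_cores = 640
boost_clock_hz = 1.53e9  # assumed boost clock
peak = tensor_cores * fmas * 2 * boost_clock_hz
print(f"{peak / 1e12:.0f} TFLOPS")  # 125 TFLOPS
```

Large matrix products in a neural network are broken into many such 4×4 tiles, which the hardware processes in parallel.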

Initially, Volta was used mostly in niche markets such as supercomputers. However, NVIDIA later added tensor cores to its consumer products and developed a technology called DLSS (Deep Learning Super Sampling) that uses these cores to improve image quality. This move helped NVIDIA dominate the GPU-accelerated deep learning market for a while.

As a result, NVIDIA’s data center revenue grew significantly: by 145% in fiscal 2017, 133% in fiscal 2018 and 52% in fiscal 2019. By the end of fiscal 2019, annual sales for high-performance computing (HPC), deep learning and other data center uses had reached $2.9 billion. Then the market for NVIDIA’s AI products exploded. In the quarter ending January 2024 (the fourth quarter of NVIDIA’s fiscal 2024), the company’s total revenue reached $22.1 billion, up 265% from a year earlier. Most of it came from the AI-driven data center business, which generated $18.4 billion.
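Percentage growth compounds, and an "increase of X%" means multiplying by (1 + X/100). The quick Python check below applies that to the figures above, showing the combined effect of three years of data center growth and the revenue implied for the year-earlier quarter. This is our own arithmetic on the article’s numbers, not figures reported by NVIDIA.

```python
growth_rates = [1.45, 1.33, 0.52]  # fiscal 2017, 2018, 2019 (145%, 133%, 52%)

multiplier = 1.0
for rate in growth_rates:
    multiplier *= 1 + rate
print(f"Data center revenue grew about {multiplier:.1f}x over three years")  # about 8.7x

# A 265% increase means this quarter is 3.65 times the year-earlier quarter.
latest_quarter_billion = 22.1
year_earlier = latest_quarter_billion / (1 + 2.65)
print(f"Implied year-earlier quarter: ${year_earlier:.2f} billion")  # $6.05 billion
```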

As you might expect, where there is money, there is competition. NVIDIA is currently the leading GPU provider, but other major technology companies are making headway. Google, for example, has developed its own tensor processing units (TPUs) and offers them through its cloud service. Amazon followed suit with a custom CPU of its own, AWS Graviton. AMD, meanwhile, has split its GPU designs into two architectures: RDNA for gaming and CDNA for computing.

Which Castle Will the GPU Conquer Next?

Future improvements will likely focus on performance, efficiency and special features such as ray tracing. As technology advances, we will see progress in artificial intelligence and machine learning, virtual reality, augmented reality and other computationally intensive applications that can take advantage of GPUs’ parallel processing.

The basic GPU design is now highly optimized and is unlikely to change much. Most future gains will depend on advances in semiconductor manufacturing, which will let designers either add more logic units or run chips at higher clock speeds.

New features have appeared throughout GPU history, but one of the most important changes was the move to a unified shader architecture, which let GPUs handle different types of calculations more efficiently. So if a major change comes, it is more likely to be in how GPUs are used across different applications and scenarios.

That’s how GPUs evolved from gaming hardware into general-purpose accelerators for workstations, servers and supercomputers around the world. Millions of people use them every day in computers, phones, TVs and streaming devices, and they power services such as voice and image recognition and music and video recommendations. GPUs will remain the main tool for computing and AI for a long time to come.