What is a Large Language Model (LLM)?

The era of artificial intelligence began a few years ago with scattered applications, and since then everything has moved much faster than expected. Every day we see new tools that benefit from artificial intelligence, and that trend will continue. AI-supported solutions are now making themselves felt in all kinds of sectors. So what’s behind these solutions? Large language models, or LLMs.

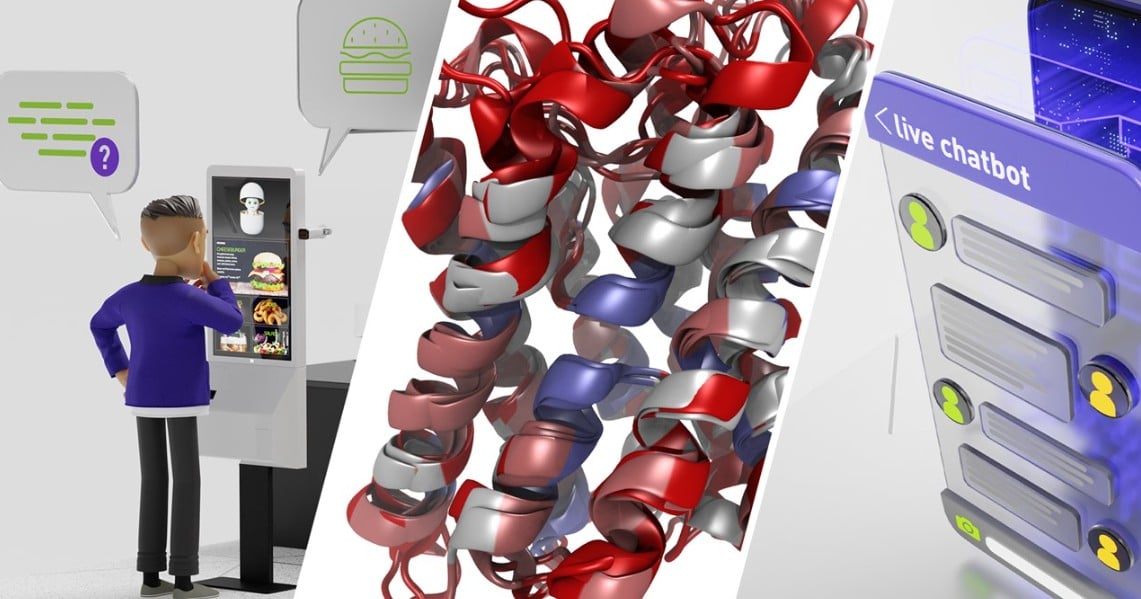

Large language models are the underlying technology powering the rapid rise of generative AI chatbots. Tools like ChatGPT, Google Bard and Bing Chat all use LLMs to generate human-like responses to your prompts and questions. It is fair to say that without language models, today’s general-purpose AI chatbots would not exist.

We wanted to take a closer look at this term, which you will hear more and more often. What exactly are large language models (LLMs), and how do they work?

What is a Large Language Model?

To start with, a large language model is a model trained on a huge body of text, which it draws on to provide logical, human-like answers to the things you ask an AI to do. The chatbots offered by various companies rely on these large models, which we call LLMs, to find an answer when we make a request.

An LLM is a deep learning algorithm that can recognize, summarize, translate, predict and generate text and other forms of content based on knowledge gained from massive datasets. In addition to accelerating natural language processing applications such as translation, chatbots and AI assistants, LLMs can be used in healthcare, software development and many other fields.

The training text comes from a variety of sources and can run into billions, or even trillions, of words. Common sources include:

- Literature: LLM training data often includes a large amount of contemporary and classical literature, such as books, poetry and plays.

- Online content: LLM training data usually includes a large amount of online content, such as blogs, web pages, forum Q&As and other online text.

- News and current events: Some, but not all, LLMs have access to current news. Others, such as GPT-3.5, are limited in this respect because their training data stops at a fixed cutoff date.

- Social media: Social media is a huge source of natural language, and some LLMs draw on public text from major platforms such as Facebook, Twitter and Instagram.

Having a large and rich body of text is a good thing, of course, but LLMs need to be trained on it before they can produce human-like responses. In other words, this data is useless on its own without AI training.

How Do Large Language Models Work?

How do LLMs use all this text to craft their answers? The first step is to analyze the data using a process called deep learning.

We said that large language models are fed large volumes of data. As the name suggests, the size of the training dataset is central to an LLM. However, the definition of “big” keeps growing along with artificial intelligence: large language models are now typically trained on datasets large enough to cover a large share of the publicly available text on the Internet, collected over a long period of time.

Such large amounts of text are fed into the AI algorithm using unsupervised learning: at this stage, the model is given a dataset without explicit instructions on what to do with it. (This is often called self-supervised learning, because the training signal, such as the next word in a sentence, comes from the text itself.) Thanks to this method, a large language model can learn not only words but also the relationships between words and the concepts behind them.
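
To make the idea of learning without explicit instructions concrete, here is a deliberately tiny sketch in Python. It is not how an LLM is built: real models train neural networks with billions of parameters, whereas this toy simply counts which word follows which (a so-called bigram model). The function names `make_training_pairs` and `train_bigram_counts` are illustrative only. What it does share with LLM pre-training is that the training signal comes from the raw text itself, with no human labels.

```python
from collections import Counter, defaultdict


def make_training_pairs(text):
    """Turn raw text into (previous word, next word) pairs.

    No human labeling is involved: the "correct answer" for each
    position is simply the word that actually follows it in the text.
    """
    words = text.lower().split()
    return list(zip(words, words[1:]))


def train_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in make_training_pairs(text):
        counts[prev][nxt] += 1
    return counts


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram_counts(corpus)
print(make_training_pairs(corpus)[:3])
print(model["the"])
```

In this corpus, "cat" followed "the" twice while "mat" and "fish" each followed it once; those frequencies are everything this toy model "knows".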

We can compare a large language model to a child who is starting to speak and learn: thanks to its algorithms and the data it is given, the model gradually improves. To take another example, a person who is fluent in a language can predict what may come next in a sentence or paragraph, and can even coin new words and concepts. Likewise, a large language model can use its knowledge to predict and generate content.
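
In probabilistic terms, autoregressive models such as GPT usually formalize this "predict what comes next" ability with the chain rule of probability: the likelihood of a whole sequence of words w_1, ..., w_n is broken into a product of next-word predictions, each conditioned on all the words before it. Treat this as a simplified view; real models work on tokens (word pieces) rather than whole words.

$$
P(w_1, w_2, \dots, w_n) = \prod_{t=1}^{n} P(w_t \mid w_1, \dots, w_{t-1})
$$

Training pushes the model to assign high probability to the word that actually came next in its training text, and generating text amounts to repeatedly choosing a likely w_t and appending it.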

Deep learning is used to identify the patterns and nuances of human language. Why? To understand grammar and syntax. More importantly, though, it also captures context. Understanding context, that is, making sense of what is being said, is a very important part of LLMs.

For example, in many languages a single word can have more than one meaning. AI models can usually infer what we mean by relating that word to the other words and sentences around it. As you can imagine, the models are not foolproof; in such cases, we may need to provide additional information to get the answer we want.
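
The role of surrounding words can be illustrated with a simplified, rule-based heuristic in the spirit of the classic Lesk algorithm for word-sense disambiguation: count how many "clue" words for each meaning appear in the sentence. This is a swapped-in technique chosen for readability, not what LLMs do internally (they learn contextual representations rather than using hand-written lists); the sense inventory and clue words below are hypothetical.

```python
import re

# Hypothetical, hand-written sense inventory for illustration only.
# Real LLMs learn contextual representations; they do not use lists like this.
SENSES = {
    "bank": {
        "financial institution": {"money", "loan", "deposit", "account", "cash"},
        "river edge": {"river", "water", "fishing", "shore", "grass"},
    },
}


def disambiguate(word, sentence):
    """Pick the sense whose clue words overlap most with the sentence."""
    context = set(re.findall(r"[a-z]+", sentence.lower()))
    senses = SENSES[word]
    # max() returns the first sense in the dictionary if scores tie
    return max(senses, key=lambda sense: len(senses[sense] & context))


print(disambiguate("bank", "I went to the bank to deposit money."))
print(disambiguate("bank", "We went fishing from the bank of the river."))
```

When a sentence contains no clue words, every sense scores zero and the function just returns the first one, which mirrors the point above: with too little context, extra information is needed to get the meaning right.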

Large language models rely on a technique called natural language generation (NLG) to produce responses. With NLG, the input is examined, and patterns learned from the training data are used to create a contextually accurate and relevant response.

LLMs go further than basic NLG: they can also tailor responses to match the emotional tone of the input. Combining this tone matching with contextual understanding is the key to how large language models generate human-like responses.
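
Response generation can be sketched with toy bigram counts: start from a word and repeatedly append the most likely next word. This "greedy" strategy is an assumption made to keep the example short; real LLMs predict from much richer context using neural networks and often sample with some randomness, which is part of why their answers vary. The function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, max_words=6):
    """Greedy generation: repeatedly append the most frequent next word."""
    output = [start]
    while len(output) < max_words:
        followers = counts.get(output[-1])
        if not followers:
            break  # the last word was never followed by anything in training
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)


corpus = "the cat sat on the mat and the cat sat on the sofa"
model = train_bigram_counts(corpus)
print(generate(model, "the"))
```

The output, "the cat sat on the cat", shows both the idea and its limits: each step is locally plausible, but with only one word of context the toy model loses track of the sentence, which is exactly the gap that contextual understanding in LLMs addresses.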

To summarize, LLMs combine a huge body of training text with deep learning and NLG techniques to generate human-like responses to your prompts. Naturally, though, there are some limitations.

Limitations

These advanced models are a great technological achievement, but they are not perfect, and many limitations remain:

- Contextual understanding: We have discussed how LLMs answer questions and what they can do. But they do not always grasp the context correctly, which sometimes leads to inappropriate or incorrect answers.

- Bias: Any bias present in the training data can also show up in the responses, including biases related to gender, race, geography and culture.

- Common sense: Common sense is hard to measure, and people acquire it from a young age simply by observing the world around them. LLMs have no such first-hand experience. They only know what their training data provides, and that does not give them a true understanding of the world.

- LLMs are only as good as their training data: Accuracy can never be guaranteed. “Garbage In, Garbage Out,” an old saying in computer science, sums up this limitation: no matter how correct a program’s logic is, if the input is invalid, the results will be wrong. In the same way, LLMs are only as good as the quality and quantity of their training data allow.

- Some argue that ethical concerns are also a limitation of LLMs, but that topic is beyond the scope of this article.
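
The bias and "Garbage In, Garbage Out" points share one mechanism: a model’s statistics mirror its training data. The made-up corpus and the helper `pronoun_counts` below are purely illustrative. Counting co-occurrences is far cruder than what an LLM learns, but the skew it reveals is exactly the kind of pattern a model trained on such text can absorb.

```python
import re
from collections import Counter


def pronoun_counts(corpus, occupation):
    """Count 'he' and 'she' in sentences that mention the given occupation."""
    counts = Counter()
    for sentence in corpus.lower().split("."):
        words = re.findall(r"[a-z]+", sentence)
        if occupation in words:
            counts.update(w for w in words if w in ("he", "she"))
    return counts


# A deliberately skewed, made-up corpus used only for illustration.
corpus = (
    "The engineer said he would fix it. The engineer said he was busy. "
    "The engineer said he agreed. The engineer said she was late. "
    "The nurse said she would help. The nurse said she was tired. "
    "The nurse said he agreed."
)

for job in ("engineer", "nurse"):
    counts = pronoun_counts(corpus, job)
    share_he = counts["he"] / sum(counts.values())
    print(f"{job}: {dict(counts)} -> share of 'he' = {share_he:.2f}")
```

Nothing in the code is "prejudiced"; the lopsided numbers come entirely from the input text, which is why curating training data matters so much.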

Why is it important?

Historically, AI models have focused on perception and understanding. Large language models trained on internet-scale datasets with hundreds of billions of parameters have now unlocked the ability of AI models to generate human-like content.

These models can read, write, code, draw and create, and they can augment human creativity to help tackle some of the world’s toughest problems. They also have the potential to increase productivity across sectors.

LLMs can be used in a wide variety of fields and situations. For example, an AI system could learn the language of protein sequences to suggest suitable compounds that help scientists develop breakthrough, life-saving vaccines. Or computers can help people do what they do best: be creative, communicate and create.

Even a writer who is stuck can use a large language model to spark their creativity. Or a software developer can become more productive by using LLMs to generate code from natural language descriptions. Long story short, large language models and the AI built on them are poised for big things.

LLM Examples

The continued advancement of AI is now largely driven by LLMs. So although language models are not exactly a new technology, they are of critical importance, and many organizations continue to develop new models.

In June 2020, OpenAI launched GPT-3 as a service, powered by a 175-billion-parameter model that can generate text and code from short written prompts. In 2021, NVIDIA and Microsoft developed Megatron-Turing Natural Language Generation 530B (MT-NLG), one of the world’s largest models for reading comprehension and natural language inference, which makes tasks such as summarization and content creation easier.

In 2022, Hugging Face introduced BLOOM, an open large language model that can generate text in 46 natural languages and more than a dozen programming languages. Another LLM, OpenAI’s Codex, turns natural language into code for software engineers and other developers. Here’s a brief look at some commonly used LLMs:

GPT

Generative Pre-trained Transformer (GPT) is perhaps the best-known LLM. GPT-3.5 was followed by GPT-4, which is available through a ChatGPT Plus subscription. Microsoft also uses GPT-4 in its Bing Chat platform.

LaMDA & Gemini

LaMDA was the first large language model used by Google’s AI chatbot, Google Bard. Bard initially launched with what was described as a “lite” version of LaMDA. It was later replaced by the more powerful PaLM model.

Not wanting to fall behind its competitors, Google later introduced Gemini, which brought major changes. In short, Gemini is a new, powerful AI model that can understand not only text but also images, video and audio. According to Google, Gemini, a multimodal model, can complete complex tasks in mathematics, physics and other fields, and can understand and generate high-quality code in various programming languages.

Gemini was trained on Google’s Tensor Processing Units (TPUs), and Google says it is faster and less costly to run than its previous PaLM models.

BERT

Bidirectional Encoder Representations from Transformers, or BERT, is also a widely used model. What distinguishes BERT from LLMs such as GPT is that it is bidirectional: it interprets each word using the words on both sides of it, whereas GPT reads text from left to right.
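
One way to picture this difference is through the attention mask that decides which words a Transformer may "look at" when building its representation of each word. GPT-style models use a causal (left-to-right) mask, while BERT lets every word see the whole sentence. The sketch below only builds these masks as plain Python lists; the helper names are hypothetical and no actual model is involved.

```python
def causal_mask(n):
    """GPT-style (left-to-right): position i may look at positions 0..i only."""
    return [[j <= i for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """BERT-style: every position may look at every other position."""
    return [[True] * n for _ in range(n)]


def visible_words(tokens, mask, position):
    """Return the words that the given position is allowed to attend to."""
    return [tok for tok, allowed in zip(tokens, mask[position]) if allowed]


tokens = ["the", "bank", "of", "the", "river"]
n = len(tokens)
print("GPT-style view of 'bank': ", visible_words(tokens, causal_mask(n), 1))
print("BERT-style view of 'bank':", visible_words(tokens, bidirectional_mask(n), 1))
```

For the word "bank", the causal mask hides "river" while the bidirectional mask exposes it. This is one reason BERT is well suited to understanding tasks such as classification, while GPT-style models are built to generate text one word at a time.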

Beyond these, many other LLMs and variants have been developed. As they evolve, their complexity, accuracy and relevance will continue to increase.

LLM and the Future of Artificial Intelligence

Existing algorithms will improve, and new language models will appear. Along with them, the way we interact with technology will change. The rapid spread of tools such as ChatGPT and Bing Chat is a clear sign of this.

It is unlikely that AI will replace you in the workplace in the short term. However, artificial intelligence is likely to play larger roles in the future. Finally, let’s look at some LLM developments expected in the future:

- Improved efficiency: With billions or even hundreds of billions of parameters, LLMs are extremely resource-hungry. As hardware and algorithms improve, models are likely to become more efficient, and response times will shorten as a result.

- Improved contextual awareness: LLMs learn largely on their own from raw text, and feedback from users can be used to refine them further. As the technology advances, their language abilities and contextual awareness will improve.

- Specific training for specific tasks: General-purpose LLMs are designed to answer all kinds of questions. But as they evolve and are trained further for specific needs, LLMs can play a major role in fields such as medicine, law, finance and education.

- More integration: LLMs could become personal digital assistants, much like Siri, helping you with everything from diet advice to travel advice to handling your correspondence.
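
The "resource-hungry" point in the first item can be made concrete with back-of-the-envelope arithmetic: storing a model’s weights takes roughly (number of parameters) × (bytes per parameter). The sketch below applies this to GPT-3’s 175 billion parameters; the precisions listed are common choices, and the figures cover the weights alone, not the extra memory needed while the model is running.

```python
def weights_memory_gb(num_parameters, bytes_per_parameter):
    """Memory needed just to store the model weights, in gigabytes (10**9 bytes).

    This ignores activations, caches and other runtime overhead,
    so real requirements are higher.
    """
    return num_parameters * bytes_per_parameter / 10**9


gpt3_parameters = 175e9  # GPT-3's parameter count
for label, nbytes in [("32-bit float", 4), ("16-bit float", 2), ("8-bit integer", 1)]:
    print(f"{label}: {weights_memory_gb(gpt3_parameters, nbytes):,.0f} GB")
```

At 16-bit precision the weights alone come to about 350 GB, far more than a single consumer graphics card holds, which is why lower-precision formats and better hardware matter for the efficiency gains described above.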